FDE-Hike

Data engineering project that analyzes the similarities between different hikes.

Project repository.

The project focuses on hiking trails. It analyzes the similarities between different routes, using Airflow to orchestrate the pipeline. Data is scraped from open-source websites and enriched with data from the Reddit API. Natural Language Processing (NLP) techniques are then used to extract information from users’ posts and comments about their experiences. The final data is stored in Neo4j, a graph database, which lets us establish relationships between routes, regions and features. These relationships help find similarities between routes and suggest alternatives based on user preferences.

Data sources

For this project we use two data sources:

- A website specialized in hiking, besthikesbc.ca, which provides information on different routes, such as:

- Hike Name

- Ranking

- Difficulty

- Distance (KM)

- Elevation Gain (M)

- Gradient

- Time (Hours)

- Dogs

- 4x4

- Season

- Region

- We use Reddit posts for data enrichment. The Reddit API was accessed using the Python library PRAW, providing us with information such as:

- Title

- Upvotes

- Comments

Additionally, we created “.csv” files containing the keywords that the Natural Language Processing (NLP) step later looks for in the Reddit comments:

- Animals.csv

- Danger.csv

- Landscapes.csv

- Nature.csv

- Structures.csv

- Weather.csv
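As a rough illustration of how such a keyword file could be read, here is a minimal sketch. The layout (one keyword per row, first column, no header) and the helper name `load_keywords` are assumptions, since the actual structure of the files is not described here. The sample content is made up.

```python
import csv
import io


def load_keywords(csv_file):
    """Read one keyword per row (first column), lowercased, skipping blanks.

    Assumed layout: a single column without a header row.
    """
    keywords = set()
    for row in csv.reader(csv_file):
        if row and row[0].strip():
            keywords.add(row[0].strip().lower())
    return keywords


# In-memory stand-in for a file such as Weather.csv (contents are made up).
sample_weather_csv = io.StringIO("Snow\nrain\n\n fog \n")
weather_keywords = load_keywords(sample_weather_csv)
```

With a real file, the same function could be called as `load_keywords(open("Weather.csv", newline=""))`.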

Ingestion

In this phase we retrieve the data from besthikesbc.ca. First, we ping Google to check that we have a connection. If the check succeeds, we extract the data from that page:

- We make an HTTP request to get the content of the page. Then, we use the BeautifulSoup library to parse the HTML and extract information from the table of interest. The table data is organized in a dictionary format and converted to JSON, which we save in a file named ‘data_hiking.json’ in a specific directory.

- The next step is to load the data from ‘data_hiking.json’ into a MongoDB collection. We first check whether a collection named ‘hikes’ already exists in the MongoDB database; if it does, we delete it so that the insertion always reflects up-to-date data.

If the connection check fails:

- We load the data from local records.
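The connectivity check and the JSON step described above might look roughly like the sketch below. It uses only the standard library: the check is a plain HTTP request rather than a ping, and the BeautifulSoup parsing and MongoDB load are left out. All function names, the directory layout and the sample row are hypothetical.

```python
import json
import tempfile
import urllib.request
from pathlib import Path


def has_connection(url="https://www.google.com", timeout=5):
    """Return True if the URL answers within the timeout, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except OSError:  # URLError and socket timeouts are OSError subclasses
        return False


def rows_to_records(headers, rows):
    """Turn table rows into one dictionary per hike, keyed by column header."""
    return [dict(zip(headers, row)) for row in rows]


def save_records(records, path):
    """Write the records to a JSON file, creating parent directories."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")


def load_records(path):
    """Read the records back, e.g. as the local fallback when offline."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


# Round trip with made-up values in a temporary directory.
headers = ["Hike Name", "Difficulty"]
rows = [["Example Hike", "Moderate"]]
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "data" / "data_hiking.json"
    save_records(rows_to_records(headers, rows), target)
    reloaded = load_records(target)
```

In a pipeline task, `has_connection()` would decide between scraping followed by `save_records(...)` and falling back to `load_records(...)` on a previously saved file.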

Note that the code related to storage in a Redis database was removed, since this technology was not necessary for the work we initially proposed. We also considered using Postgres, but decided that MongoDB best suited our needs: the collected information consists of a single table, so we preferred a NoSQL database.

Staging

Cleansing

During the Cleansing process, we focused on determining which fields would be helpful in addressing the questions initially posed. This concerned columns such as:

- Distance (KM)

- Elevation Gain (M)

- Gradient

- Dogs

- 4x4

- Season

Transformations

We also considered transforming certain fields (Ranking and Time (Hours)), but decided that the original form of the data was easier to understand. Only one small modification was necessary: during extraction from the web page, a missing space inside the string of the ‘Time (Hours)’ column was corrected.
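A fix of this kind can be sketched with a regular expression. The exact shape of the scraped strings is not shown here, so the example values and the rule used (insert a space wherever a digit is directly followed by a letter) are assumptions, and `add_missing_space` is a hypothetical helper.

```python
import re


def add_missing_space(value):
    """Insert a space where a digit is directly followed by a letter."""
    return re.sub(r"(?<=\d)(?=[A-Za-z])", " ", value)


# Made-up examples of what a scraped 'Time (Hours)' value might look like.
fixed = add_missing_space("5hours")
unchanged = add_missing_space("5 hours")
```

Values that already contain the space pass through untouched, so the function can be applied to the whole column.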

Enrichments

In this section, we make the most of the data collected from Reddit posts.

From our MongoDB database, where we store the information from the hiking website, we call the Reddit API using the "Hike Name" field, obtaining the posts related to each hike.

We then use Natural Language Processing (NLP) techniques to identify the keywords of the topics that interest us and how often they appear in the posts. We also run a sentiment analysis on each comment in which a keyword was found.
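The keyword matching and frequency count can be sketched as follows. This is an illustration under assumptions, not the project's actual code: the function names, topics and comments are made up, keywords are matched as single whole words, and the sentiment scorer is a toy placeholder standing in for whichever sentiment model is used.

```python
import re
from collections import Counter


def find_keyword_mentions(comments, keywords_by_topic, sentiment_fn):
    """Return (topic, keyword, sentiment) for every keyword hit in every comment.

    A keyword counts once per comment in which it appears as a whole word.
    """
    mentions = []
    for comment in comments:
        words = set(re.findall(r"[a-z']+", comment.lower()))
        score = sentiment_fn(comment)
        for topic, keywords in keywords_by_topic.items():
            for keyword in sorted(keywords & words):
                mentions.append((topic, keyword, score))
    return mentions


def toy_sentiment(text):
    """Placeholder scorer: +1 for 'great', -1 for 'awful'. Not a real model."""
    lowered = text.lower()
    return ("great" in lowered) - ("awful" in lowered)


# Made-up comments and keyword lists.
comments = [
    "Great views, but there was still snow near the top.",
    "Awful mud and snow, and we saw a bear!",
]
keywords_by_topic = {"weather": {"snow", "rain"}, "animals": {"bear"}}

mentions = find_keyword_mentions(comments, keywords_by_topic, toy_sentiment)
frequency = Counter(keyword for _, keyword, _ in mentions)
```

Each mention keeps the sentiment of the comment it came from, which matches the idea of attaching a sentiment to every keyword occurrence.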

Production

The production graph database is structured in such a way that each hike is its own node. It can be connected to many of the following node types:

- Difficulty (Very Easy, Easy, Moderate, Difficult, Very Difficult): An expression of the difficulty of the hike.

- Keyword: Keywords extracted by Natural Language Processing from Reddit posts. One hike can be connected to many keywords.

- Ranking: Ranges from 1 to 5 stars.

- Region: The region where the hike is located.

- Time: The best time of day to perform the hike.

- Keyword_avg

Keyword and Keyword_avg are the most interesting of these node types: they come from analyzing the posts and carry the sentiment of each post's content and comments. A new Keyword node is created for each keyword occurrence, so a keyword found more than once is repeated, each node carrying its own sentiment. Finally, a pattern match computes the average sentiment of each keyword and creates new nodes named {keyword}_avg of type “Keyword_avg”, which contribute to the final analysis of the graph.
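The averaging step can be illustrated outside the database with a few lines of Python. In the project this is done with a pattern match in Neo4j; the sketch below only mirrors the arithmetic. Grouping per hike, the tuple layout and the sample values are assumptions.

```python
from collections import defaultdict
from statistics import mean


def average_sentiment(mentions):
    """Average the sentiment of every (hike, keyword) pair.

    `mentions` holds (hike, keyword, sentiment) tuples, one per Keyword node.
    Returns {(hike, "<keyword>_avg"): mean sentiment}.
    """
    grouped = defaultdict(list)
    for hike, keyword, sentiment in mentions:
        grouped[(hike, keyword)].append(sentiment)
    return {
        (hike, f"{keyword}_avg"): mean(scores)
        for (hike, keyword), scores in grouped.items()
    }


# Made-up mentions: 'snow' was found twice, 'bear' once.
mentions = [
    ("Example Hike", "snow", 0.5),
    ("Example Hike", "snow", -0.25),
    ("Example Hike", "bear", -0.5),
]
averages = average_sentiment(mentions)
```

Each entry of `averages` corresponds to one Keyword_avg node attached to a hike.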

Example Queries

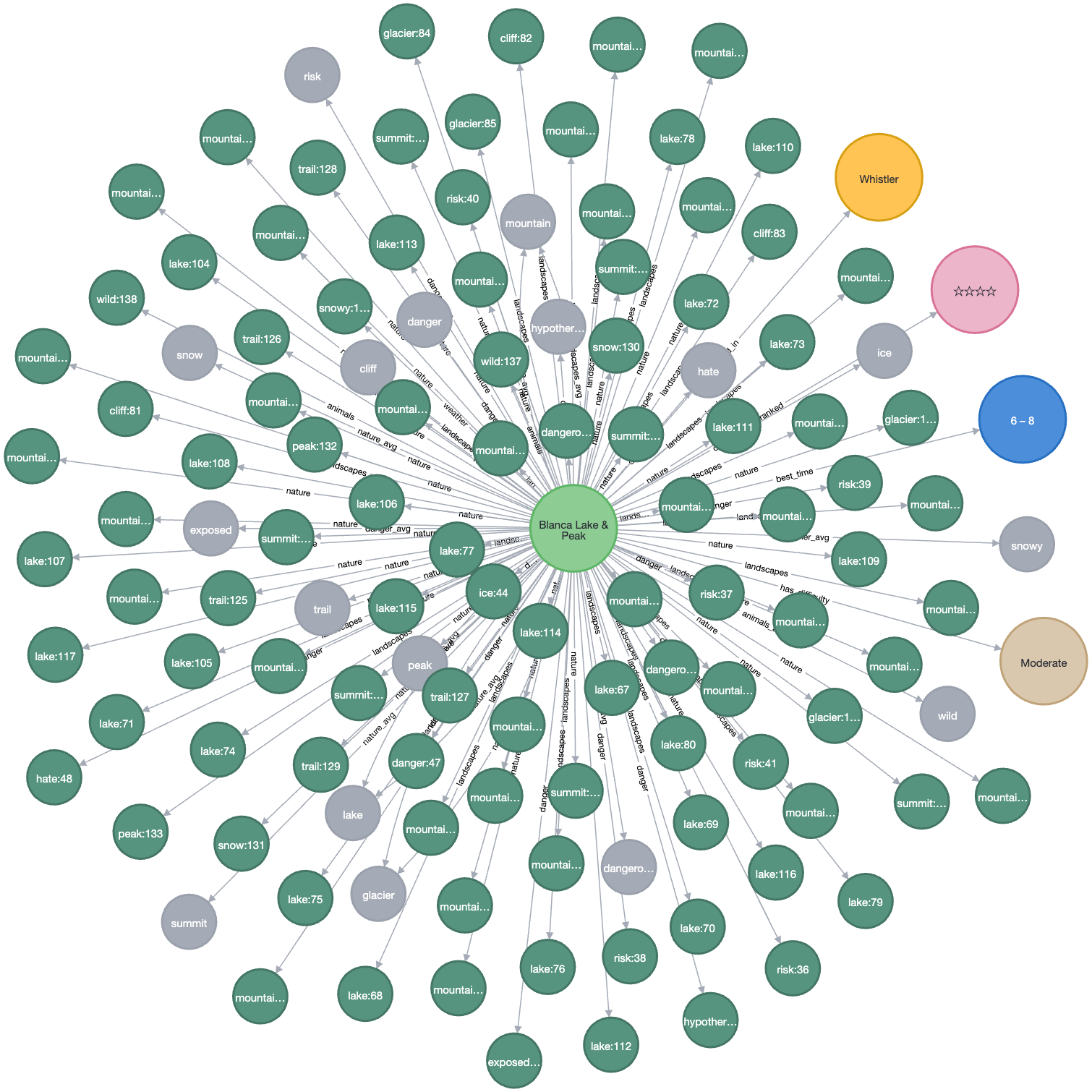

1. Retrieve all Connected Nodes for a Hike:

MATCH (hike:Hike {name: 'Blanca Lake & Peak'})-[*1]-(connectedNode)

RETURN hike, connectedNode;

This query retrieves all nodes connected to the ‘Blanca Lake & Peak’ hike, regardless of relationship type.

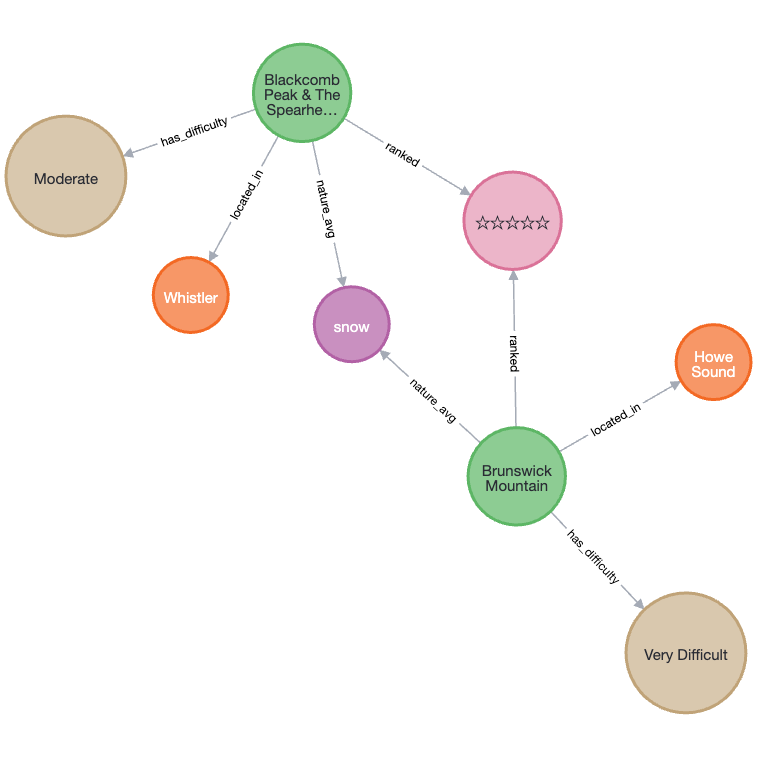

2. Identify the Best Snow Hikes in Different Regions:

MATCH (hike:Hike)-[:located_in]->(region:Region)

MATCH (hike)-[:has_difficulty]->(difficulty:Difficulty)

MATCH (hike)-[:nature_avg]->(avgKeyword:Keyword_avg {name: 'snow'})

MATCH (hike)-[:ranked]->(ranking:Ranking {value: "☆☆☆☆☆"})

RETURN hike, difficulty, region, avgKeyword, ranking;

This query returns the best snowy hiking trails in various regions, based on comments from Reddit users, along with the rating and difficulty level of each route. For routes where you can enjoy snow, it suggests “Blackcomb Peak & The Spearhead” or “Brunswick Mountain”, together with the regions they belong to. Both routes have a 5-star rating, so the choice between them depends on the desired level of difficulty.